Understanding how our mind organizes and accesses spatial information has been an ongoing challenge for scientists. Knowing where we are, what surrounds us and how to navigate our environment depends not only on retrieving the memories stored in our brains, but also on how our neurons fire together to carry out complex computations.

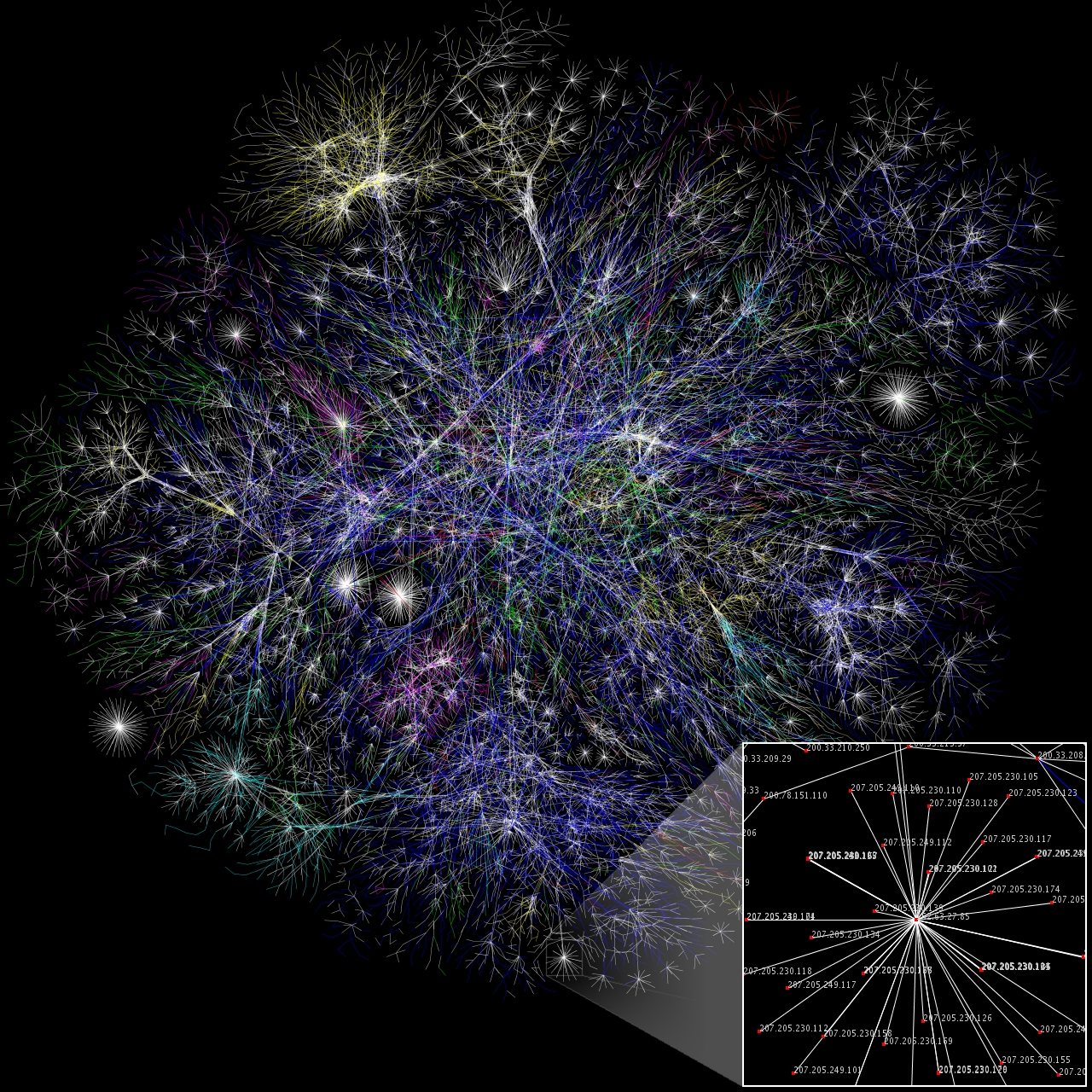

Because each of our billions of brain cells is connected to thousands of others, studying them is far more complex than analyzing individual structures. Fortunately for neuroscientists, who are constantly looking for ways to model brain function, artificial intelligence may provide insight into our own intelligence.

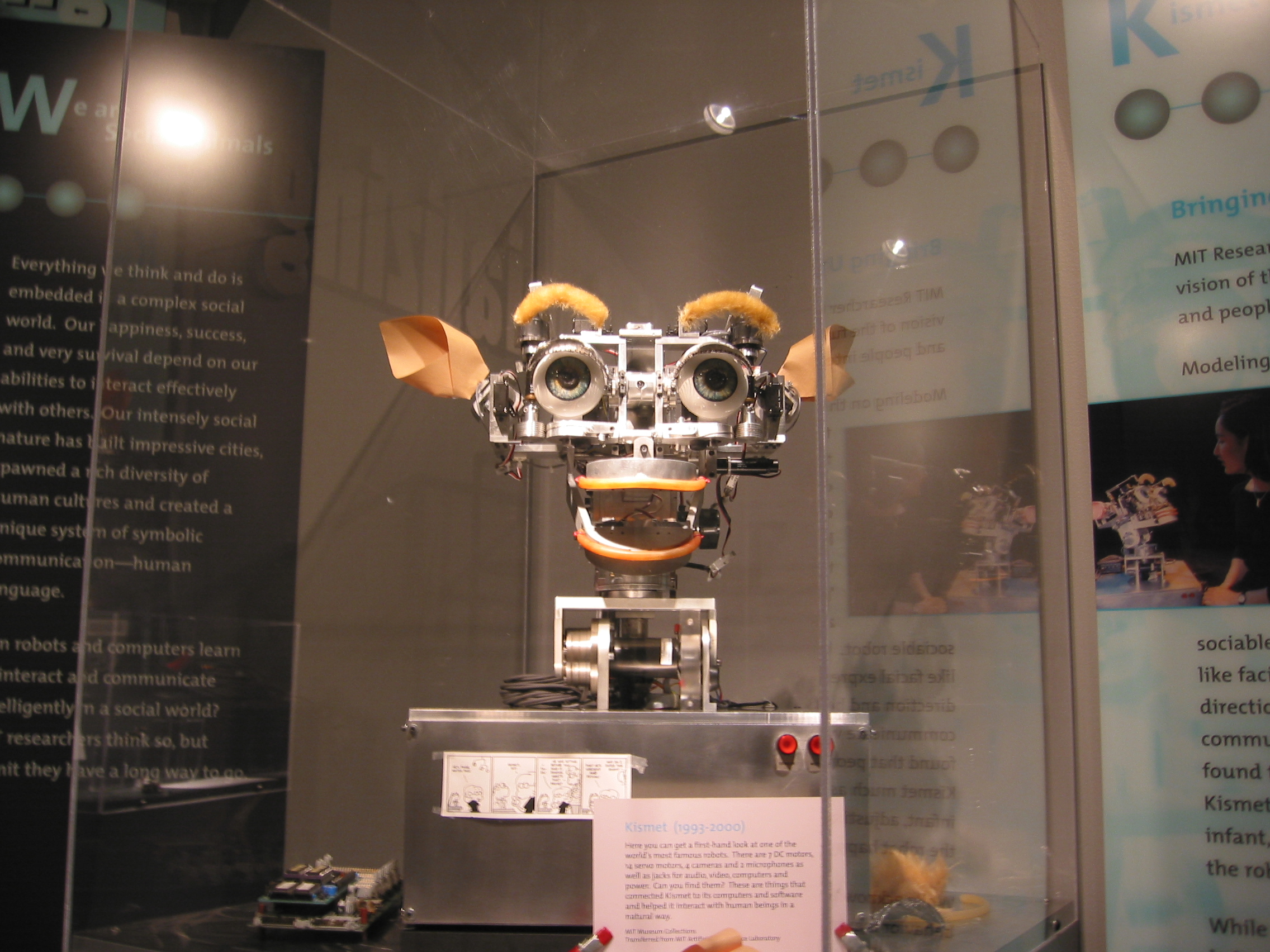

New research shows that transformers, a type of deep learning model widely used in automated systems, can help us better understand how our own neural networks carry out computations, and may reveal untapped capabilities of the brain.

Grid cells: How our brain maps locations

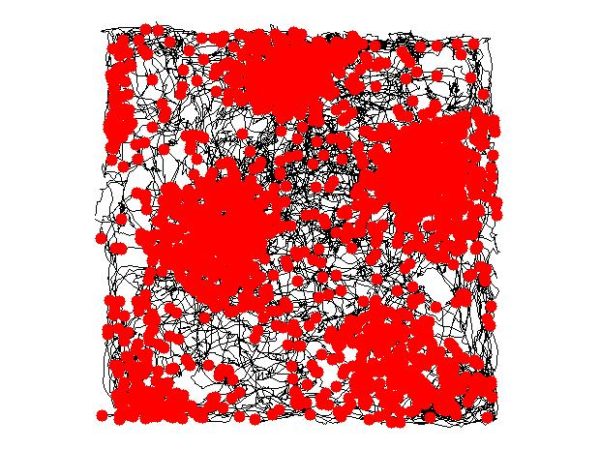

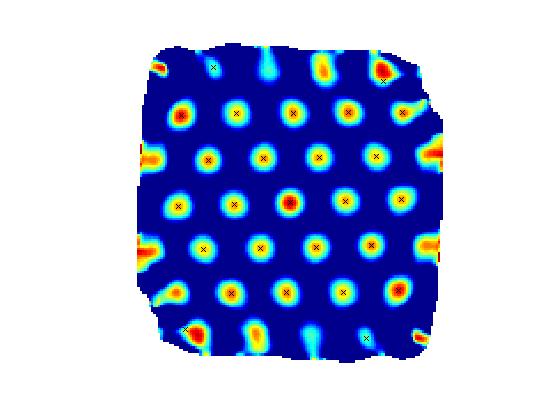

Grid cells are neurons that are responsible for processing and storing information about one’s position in space. As we navigate an open area, these brain cells fire at regularly spaced locations to integrate information about location, distance and direction.

Located in the entorhinal cortex, a region closely connected to the hippocampus, grid cells get their name from the fact that connecting the centers of their firing fields forms a triangular grid, or honeycomb pattern, that tiles the space. The symmetrical arrangement of these “neural firing” maps is thought to be due to the brain’s efforts to create a cognitive representation of perceived spaces.
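
The honeycomb pattern can be illustrated with a short Python sketch. It assumes a common idealization rather than a fitted neural model: the firing rate is the sum of three cosine waves whose directions are 60 degrees apart. The function name `grid_cell_rate` and the `spacing` parameter are made up for this illustration.

```python
import math

def grid_cell_rate(x, y, spacing=1.0):
    """Idealized grid-cell firing rate at position (x, y), scaled to [0, 1].

    Assumption: a textbook-style idealization that sums three cosine
    waves 60 degrees apart; it is not a model fitted to real neurons.
    """
    # Wave number chosen so that neighboring firing fields are `spacing` apart.
    k = 4 * math.pi / (math.sqrt(3) * spacing)
    total = 0.0
    for degrees in (0, 60, 120):
        angle = math.radians(degrees)
        total += math.cos(k * (x * math.cos(angle) + y * math.sin(angle)))
    # The sum ranges from -1.5 to 3; rescale so the peak rate is 1.
    return (total + 1.5) / 4.5

# Sample the rate at the origin, at a neighboring field, and halfway between.
center = grid_cell_rate(0.0, 0.0)
neighbor = grid_cell_rate(math.sqrt(3) / 2, 0.5)
between = grid_cell_rate(0.0, 0.5)
```

In this sketch the rate peaks at the origin and again at every point one `spacing` away along the six directions of the lattice, and drops in between, which is the triangular tiling described above.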

A fortunate similarity between the hippocampus and transformers

In their quest to optimize machine learning processes, experts found a practical similarity between the way transformers, a popular deep learning model, and the hippocampus, a brain structure key to memory, compute information.

Unlike recurrent neural networks, which process input one element at a time, transformers can process an entire input all at once. By employing “self-attention” (a mechanism that allows individual inputs to interact with one another), this model weighs the significance of each part of the input data, making it possible to process a disordered flow of information and adapt to rapidly changing input, something many other AI models struggle to do.
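
To make self-attention more concrete, here is a minimal sketch in plain Python. It rests on a simplifying assumption: the queries, keys and values are the raw input vectors themselves, whereas real transformers first apply learned projections. The names `softmax` and `self_attention` are illustrative and do not come from any library.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(inputs):
    """Simplified self-attention: each input vector attends to all inputs.

    Assumption: queries, keys and values are the inputs themselves;
    real transformers apply learned linear projections first.
    """
    dim = len(inputs[0])
    outputs = []
    for query in inputs:
        # Scaled dot product of this input with every input, itself included.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in inputs
        ]
        weights = softmax(scores)
        # The output is a weighted average of all input vectors.
        outputs.append(
            [sum(w * value[i] for w, value in zip(weights, inputs)) for i in range(dim)]
        )
    return outputs

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed = self_attention(tokens)
```

Each row of `mixed` blends information from all three inputs. Because the weights depend on content rather than on a fixed processing order, nothing in the loop requires the inputs to be read one after another.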

Scientists believe that developing and retooling transformers could make it possible to better understand how artificial neural networks function and, more importantly, to grasp the complexity of the seemingly effortless computations that the brain performs on real-life data.

Transformers and their main application

Transformers were first developed in 2017 by a Google Brain team as a new way for AI to process language. What made this model so innovative was that, instead of processing a sentence as a string of words, its self-attention mechanism could provide context for any position of the input sequence.

In practical terms, this meant that if the model received a natural language sentence, such as a saying or a common expression, it would process the sentence all at once instead of making sense of one word at a time. This improved language processing and reduced training times.

Alternative applications: Memory retrieval

Since then, transformers have been employed in different fields with much success. One noteworthy application was proposed in 2020 by computer scientist Sepp Hochreiter, who, together with his team at the Johannes Kepler University in Linz, Austria, used a transformer to refine a memory retrieval model introduced forty years ago, known as the Hopfield network.

Once transformers were incorporated into the Hopfield network, the scientists found that the mechanism’s connections became more effective, allowing it to store and retrieve more memories than the standard model. The model was then further enhanced by integrating the transformers’ self-attention mechanism into the Hopfield network itself.
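
The link between memory retrieval and self-attention can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the researchers' code: it uses a softmax-weighted update of the kind associated with modern Hopfield networks, and the function name `retrieve`, the sharpness value `beta` and the stored patterns are all invented for the example.

```python
import math

def retrieve(stored, probe, beta=4.0):
    """One softmax-weighted retrieval step over stored patterns.

    Assumption: an attention-like update in the style of modern
    Hopfield networks; beta (sharpness) is an illustrative value.
    """
    # Similarity of the probe to each stored pattern.
    scores = [beta * sum(p * q for p, q in zip(pattern, probe)) for pattern in stored]
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # New state: weighted average of the stored patterns.
    return [
        sum(w * pattern[i] for w, pattern in zip(weights, stored))
        for i in range(len(probe))
    ]

memories = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
    [-1, -1, 1, 1, 1, 1, -1, -1],
]
noisy = [1, 1, 1, -1, -1, -1, -1, -1]  # first memory with one entry flipped
recalled = [1 if x >= 0 else -1 for x in retrieve(memories, noisy)]
```

Here the corrupted probe is pulled back to the first stored memory in a single step. The weighting step has the same form as the attention weights in a transformer, with the stored patterns playing the role of keys and values.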

Where natural symmetry becomes a rule

To take it a step further, scientists tried a different approach earlier this year. They modified the Hopfield network’s transformer so that, instead of treating memories as linear sets of data, as it typically would with language, it would encode them as coordinates in higher-dimensional spaces.

The researchers were fascinated by the results. The pattern shown by the spatial coordinates was mathematically equivalent to the patterns exhibited by grid cells in the brain. “Grid cells have this kind of exciting, beautiful, regular structure, and with striking patterns that are unlikely to pop up at random,” explained Caswell Barry, professorial research fellow at University College London.

The discovery not only hinted at the potential of AI to help us understand the human body, but also pointed to the amazing precision and intricacies of the brain that we have yet to comprehend. In fact, even the best-performing transformers only work well for words and short sentences and are far from matching the brain’s ability to perform larger-scale tasks, such as storytelling.

While there are many limitations to AI, and grave concerns about its potential applications, its utility in helping us discover the depth of the miraculous workings of the human brain may be a step in the right direction.
