Artificial intelligence (AI) chatbots and software have soared in popularity in recent months, yet some of them have earned a reputation as unreliable sources of information. Now, a defamation lawsuit against OpenAI, the company behind ChatGPT, raises further questions about the popular chatbot, the way it collects and processes data, and whether its creators can be held accountable for the fabricated stories it sometimes generates.

AI chatbots might be able to suggest a delicious recipe or workout regimen, or help you put together a detailed travel itinerary, but they falter when it comes to providing accurate details about historical events, and their answers often need to be fact-checked. In more extreme cases, AI-enabled equipment has reportedly refused to follow human commands during simulated tests, choosing instead to overwrite its own code.

The twilight zone

Chatbots such as ChatGPT can struggle to distinguish between reality and fiction, sometimes inventing narratives on the spot and presenting them as fact. Although ChatGPT can lighten users' workloads by writing emails, cover letters, and resumes in seconds, its tendency to fabricate answers to queries is a growing problem.

The lawsuit centers on a Georgia-based journalist who was writing an article about a federal court case and asked ChatGPT to summarize a PDF. Though the chatbot did produce a summary, it also included fabricated accusations against an unrelated third party: Mark Walters, an Atlanta-based radio host who is now suing OpenAI for defamation.

In its summary, the bot falsely accused Walters of misappropriating $5 million from a Second Amendment advocacy group, an accusation that had never before been made against him.

Walters has since sued OpenAI, alleging that its chatbot published "defamatory content" when it relayed the unfounded claims to the reporter, potentially damaging his reputation and exposing him to public disparagement. The journalist did not publish the information, and, as The Verge reported, it remains unclear how Walters learned of the allegations.

Walters is seeking unspecified general and punitive damages, as well as legal costs, with the amount to be determined by a jury if the case goes to trial.

A double-edged sword

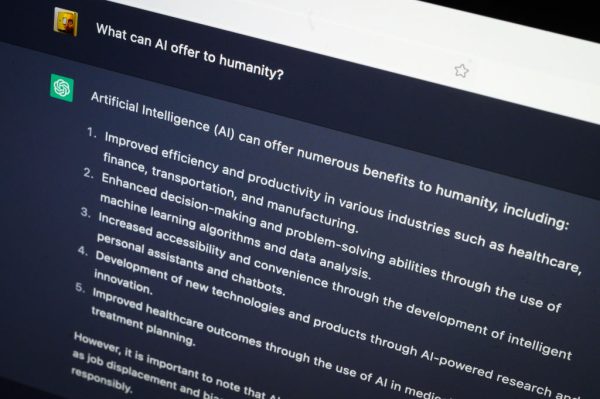

Launched in November 2022, ChatGPT is based on a "transformer architecture," which its makers say makes it well suited to understanding context and prompts. The model was trained on a broad range of data from the internet and other sources, with its knowledge extending only up to 2021. According to OpenAI, it does not have access to personal data unless that data is explicitly provided during a conversation, and it does not store or share chats with third-party vendors or other businesses.

While ChatGPT clearly should not invent details about real people, especially in relation to federal charges and criminal offenses, it remains uncertain whether OpenAI can be held liable for any resulting damages.

ChatGPT itself has indicated that it is not a trustworthy source, a sentiment OpenAI has echoed in past statements and disclaimers. The case could also fall under Section 230 of the Communications Decency Act, which generally shields online platforms from liability for content posted by third parties rather than by the platform itself. However, it is unclear whether that protection would extend to an AI system that generates its own content.

Ambiguity

“Using it for programming, and it’s annoying that it invents stuff,” says one user in a Reddit thread on r/ChatGPT, describing the chatbot's failure to correctly solve a basic coding problem. “I tried it seriously yesterday for the first time and was disappointed, it couldn’t even figure out a ‘one for loop’ problem both in TS and python.”

In the grand scheme of things, this lawsuit could be the first of many AI-related legal disputes. The rapid growth of generative text services has opened a can of worms, and the existing legal system has few safeguards or established standards to deal with it.

For now, the responsibility falls on the companies involved to decide which protective measures to implement, how to carry them out, and how to prevent malicious use. To avoid common chatbot pitfalls, users should fact-check everything a chatbot tells them against reputable sources, both to avoid relying on inaccurate information and to steer clear of legal trouble.