The world’s wealthiest man recently moved to acquire Big Tech social media keystone Twitter, and the bid appears to have exposed a long-running problem quietly writhing beneath the company’s apparently austere surface: swarms of bots posing as authentic, human users.

As Elon Musk undertakes the due diligence process on the acquisition, which was not expected to officially close until October, potentially fatal roadblocks have emerged.

On May 13, Musk announced on Twitter itself that the deal was temporarily on hold “pending details supporting calculation that spam/fake accounts do indeed represent less than 5% of users.”

In the tweet, Musk cited a May 2 Reuters article referencing recent Twitter filings that claimed only 5 percent or less of all “monetizable daily active users” (mDAU) were fake accounts.

In Twitter’s April 28 Q1 2022 Financial Statements, the company represented to shareholders and the public that the platform averaged 229 million mDAU, a 15.9 percent year-over-year increase.


On May 15, Musk doubled down in a reply to a video, posted by another user, that cataloged a long list of one account’s followers, all of which bore the hallmarks of bots rather than humans. “Exactly. I have yet to see *any* analysis that has fake/spam/duplicates at <5%,” he wrote.

The same day, Musk went a step further in what was quickly becoming a public relations nightmare for Twitter’s Board of Directors, tweeting that “There is some chance it might be over 90% of daily active users, which is the metric that matters to advertisers…Very odd that the most popular tweets of all time were only liked by ~2% of daily active users.”

How many is too many?

Musk’s claims appear to hold water. Also on May 15, audience research firm SparkToro released the findings of a two-day analysis that used machine learning to select 44,058 public-facing Twitter accounts from a prospective pool of 130 million active profiles.

The firm made a distinction between bot accounts that provide a service, such as those that repost restaurant findings or aggregate industry-specific articles into feed-style tweets, and “spam or fake accounts.”

The latter category was described as ranging from accounts “peddling propaganda and disinformation to those attempting to sell products, induce website clicks, push phishing attempts or malware, manipulate stocks or cryptocurrencies, and (perhaps worst) harass or intimidate users of the platform.”


The team found that of the 44,058 active profiles, 8,555 “have an overlap of features highly correlated with fake/spam accounts,” amounting to 19.42 percent, almost four times the figure Twitter reports in its regulatory filings.
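The 19.42 percent figure follows directly from SparkToro’s two counts. A quick check in Python, using only the numbers quoted above, reproduces it along with the “almost four times” comparison. Note that this simply divides one percentage by the other; it does not adjust for any difference between SparkToro’s sample and Twitter’s mDAU metric.

```python
# Recompute SparkToro's headline share from the counts quoted in the article.
sampled_accounts = 44_058      # active public profiles in SparkToro's sample
flagged_accounts = 8_555       # profiles whose features correlate with fake/spam accounts
twitter_reported_pct = 5.0     # Twitter's stated figure for fake accounts, in percent

flagged_pct = flagged_accounts / sampled_accounts * 100
multiple = flagged_pct / twitter_reported_pct

print(f"Flagged share: {flagged_pct:.2f}%")        # Flagged share: 19.42%
print(f"Multiple of 5% figure: {multiple:.2f}x")    # Multiple of 5% figure: 3.88x
```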

Additionally, the report applied the same analytics model it had used on former President Donald Trump’s account (before he was muzzled by Silicon Valley following the highly dubious Jan. 6, 2021, Capitol Riot) to Musk’s 93.4 million Twitter followers, finding that only 26.8 million are active accounts.

SparkToro concluded that “in total, 70.23% of @ElonMusk followers are unlikely to be authentic, active users who see his tweets.”

The firm noted, however, that it found the results “unsurprising,” because in its experience very large accounts, and those that generate heavy press coverage, tend to attract more fake and spam followers.

The fact that Twitter’s algorithm recommends Musk’s profile to new users also contributes to the high ratio of fakes, they said.

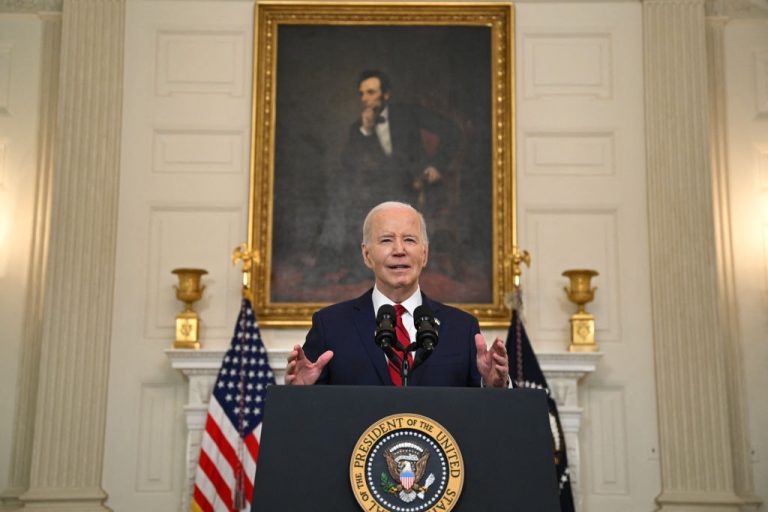

Additionally, according to a May 17 Newsweek article, SparkToro further concluded that 49.3 percent of all users following President Joe Biden’s official @POTUS account also meet their criteria for fake and spam accounts.

No longer a fringe issue

In the past, the notion that Twitter is really a seething swarm of fake accounts was considered either a conspiracy theory or a problem isolated to certain fringe and relatively inconsequential corners of the platform.

For example, in one instance in September of last year, security researchers found the Chinese Communist Party had deployed a botnet seeking to influence opinion on topics that few in the West are concerned about, such as GETTR investor Guo Wengui.

Additionally, researchers in August found that many of the CCP’s bot accounts used profile photos generated by StyleGAN, an AI system that creates authentic-looking human faces using a generative adversarial network.

The tell was easy to catch, however, because a quirk of StyleGAN is that it paints the eyes of every face it creates on the same horizontal axis.
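Because the eyes land in the same spot from one generated face to the next, the tell shows up only when many profile photos are compared side by side. The Python sketch below illustrates that idea under stated assumptions: it takes eye-center coordinates that some face-landmark detector has already extracted (the article does not say how the researchers did this), expressed as fractions of image width and height. The function names and the 0.01 thresholds are hypothetical.

```python
from statistics import pstdev

# Hypothetical input format: ((left_x, left_y), (right_x, right_y)) per photo,
# with coordinates normalized to the range 0-1 by an unspecified landmark detector.
EyePair = tuple[tuple[float, float], tuple[float, float]]


def eye_line_spread(photos: list[EyePair]) -> float:
    """Population standard deviation of each photo's eye-line height."""
    heights = [(left[1] + right[1]) / 2 for left, right in photos]
    return pstdev(heights)


def looks_like_gan_batch(photos: list[EyePair],
                         max_tilt: float = 0.01,
                         max_spread: float = 0.01) -> bool:
    """Flag a batch whose eyes sit on one shared, level horizontal line.

    max_tilt: largest allowed height difference between the two eyes in one photo.
    max_spread: largest allowed variation of the eye line across photos.
    Both thresholds are illustrative assumptions, not published values.
    """
    if not photos:
        return False
    level = all(abs(left[1] - right[1]) <= max_tilt for left, right in photos)
    return level and eye_line_spread(photos) <= max_spread


# Usage with made-up coordinates:
gan_like = [((0.38, 0.480), (0.62, 0.480)),
            ((0.37, 0.481), (0.63, 0.479)),
            ((0.385, 0.480), (0.615, 0.482))]
candid = [((0.30, 0.35), (0.55, 0.40)),
          ((0.40, 0.55), (0.65, 0.52)),
          ((0.20, 0.45), (0.42, 0.47))]

print(looks_like_gan_batch(gan_like))  # True
print(looks_like_gan_batch(candid))    # False
```

A real check would also compare horizontal eye positions, since StyleGAN faces tend to share those too; the sketch keeps to the horizontal-axis tell the researchers described.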

But how common is the synthetic Twitterotti, really?

In June 2020, antivirus and security firm Norton issued an advisory titled What’s a Twitter Bot and How To Spot One, which noted that although bot (or “zombie”) accounts are “programmed to perform tasks that resemble those of everyday Twitter users — such as liking tweets and following other users — their purpose is to tweet and retweet content for specific goals on a large scale.”

“Twitter bots also can be designed for the malicious purposes of platform intimidation and manipulation — like spreading fake news campaigns, spamming, violating others’ privacy, and sock-puppeting,” added the advisory.

Norton also offered a checklist of typical bot behaviors that may alert users that the account they’re interacting with is fake, including:

- Multiple accounts tweeting within the same narrow time frame

- Short replies that appear to be automated

- Accounts with recent creation dates

- Profiles with handles including a string of numbers

- Missing profile photos, biographies, and descriptions

- Users who follow a lot of accounts, but have few followers themselves
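Most items on Norton’s checklist translate naturally into simple rules. The Python sketch below is one hypothetical way to encode them. The `Account` fields, the 90-day “recent” cutoff, the four-digit run in a handle, the 10-to-1 following-to-followers ratio, and the two-minute lockstep window are illustrative assumptions, not thresholds Norton published. The “short automated replies” item is left out because it depends on reading tweet text.

```python
import re
from dataclasses import dataclass
from datetime import date, datetime, timedelta


@dataclass
class Account:
    # Hypothetical record of public profile fields.
    handle: str
    created: date
    has_photo: bool
    bio: str
    following: int
    followers: int


def bot_signals(acct: Account, today: date) -> list[str]:
    """Return the checklist items this profile matches (thresholds are assumptions)."""
    signals = []
    if (today - acct.created).days < 90:
        signals.append("recently created")
    if re.search(r"\d{4,}", acct.handle):
        signals.append("handle contains a string of numbers")
    if not acct.has_photo or not acct.bio.strip():
        signals.append("missing photo or bio")
    if acct.following >= 10 * max(acct.followers, 1):
        signals.append("follows many, followed by few")
    return signals


def tweeted_in_lockstep(times: list[datetime],
                        window: timedelta = timedelta(minutes=2)) -> bool:
    """True if several accounts' tweets all fall inside one narrow window."""
    return len(times) > 1 and max(times) - min(times) <= window


# Usage with made-up accounts:
today = date(2022, 5, 17)
suspect = Account("jane48213907", date(2022, 5, 1), False, "", 4_000, 12)
regular = Account("jane_doe", date(2015, 3, 2), True, "Coffee and cats.", 300, 450)

print(len(bot_signals(suspect, today)))   # 4
print(bot_signals(regular, today))        # []
```

Returning the list of matched signals, rather than a single yes/no verdict, reflects the checklist’s spirit: any one trait is weak evidence, and several together are what should raise suspicion.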

Meanwhile, in 2018, Duo Security published a report titled Don’t @ Me that used machine learning to uncover a 15,000-account botnet pushing a cryptocurrency scam.

Notably, Duo found that the botnet actually evolved over time in order to evade detection by Twitter moderators.

Command and control

Researchers from California State Polytechnic University, Pomona (Cal Poly Pomona) published a technical paper on their website demonstrating how Twitter can also be used as a command-and-control hub to issue commands to external botnets that conduct activities such as denial-of-service attacks.

The authors described the purpose of the exercise this way: “By demonstrating how a botmaster might perform such communication using online social networks, our work provides the basis to detect and prevent emerging botnet activities.”

The team stated that Twitter served the purpose well because it can issue commands “with low latency and nearly perfect rate of transmission.”

The prototype included a system to generate “plausible cover messages based on a required tweet length determined by an encoding map that has been constructed based on the structure of the secret messages.”

It also deployed a framework to automatically generate new account names.

The system was developed and deployed using the Twitter4J library and Twitter’s official API, which is part of the company’s Twitter for Business platform.

Dirty spam

In 2018, a researcher from F-Secure stumbled upon a 22,000-member botnet after noticing a burst of suspicious likes on a tweet he had just posted.

The analyst found that the profile descriptions of the accounts that had liked his post all carried odd phrasing such as “you love it harshly” and “waiting you at,” along with several suspicious-looking shortened URLs.

When a colleague used a VPN to mask their location and switched it to Finland, the URL led to a site called “Dirty Tinder.”

“Checking further, I noticed that some of the accounts either followed, or were being followed by other accounts with similar traits, so I decided to write a script to programmatically ‘crawl’ this network, in order to see how large it is,” said researcher Andrew Patel.

Patel observed that “the discovered accounts seemed to be forming independent ‘clusters’ (through follow/friend relationships).”

“This is not what you’d expect from a normal social interaction graph,” he noted.
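The article does not describe Patel’s script in detail, but the approach he outlines, starting from suspicious accounts and following their follow/friend links to see how large the network is, amounts to a graph crawl. The Python sketch below is a minimal breadth-first version that runs on a small in-memory graph rather than the Twitter API; the graph, the `is_suspicious` trait test, and all function names are hypothetical. A second pass splits the crawled accounts into connected groups, one way to surface the kind of independent “clusters” Patel described.

```python
from collections import deque
from typing import Callable

# Hypothetical follow/friend graph: account -> accounts it is linked to (either direction).
Graph = dict[str, set[str]]


def undirected(edges: list[tuple[str, str]]) -> Graph:
    """Build a symmetric adjacency map from follow/friend pairs."""
    graph: Graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def crawl(graph: Graph, seeds: list[str],
          is_suspicious: Callable[[str], bool]) -> set[str]:
    """Breadth-first crawl that only expands through accounts passing the trait test."""
    found = {s for s in seeds if is_suspicious(s)}
    queue = deque(found)
    while queue:
        current = queue.popleft()
        for neighbor in graph.get(current, set()):
            if neighbor not in found and is_suspicious(neighbor):
                found.add(neighbor)
                queue.append(neighbor)
    return found


def clusters(graph: Graph, members: set[str]) -> list[set[str]]:
    """Split crawled accounts into groups connected only through other members."""
    remaining = set(members)
    groups = []
    while remaining:
        start = remaining.pop()
        group, stack = {start}, [start]
        while stack:
            for neighbor in graph.get(stack.pop(), set()):
                if neighbor in remaining:
                    remaining.remove(neighbor)
                    group.add(neighbor)
                    stack.append(neighbor)
        groups.append(group)
    return groups


# Usage: two bot groups that touch only through an ordinary account.
g = undirected([("bot1", "bot2"), ("bot2", "bot3"), ("bot3", "human1"),
                ("human1", "bot4"), ("bot4", "bot5")])
found = crawl(g, ["bot1", "bot4"], lambda name: name.startswith("bot"))
print(sorted(found))                                   # ['bot1', 'bot2', 'bot3', 'bot4', 'bot5']
print(sorted(sorted(c) for c in clusters(g, found)))   # [['bot1', 'bot2', 'bot3'], ['bot4', 'bot5']]
```

Restricting expansion to accounts that pass the trait test keeps the crawl from spilling into the ordinary users the bots happen to follow, which is also why the two groups come out separate.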

What was especially notable about Patel’s findings was that many of the botnet accounts had been created as long as six to eight years earlier, often appeared to have different interests, and tweeted about and liked entirely different topics at different times.