EDITOR'S PICK

‘The CCP is the greatest enemy of the Chinese nation’: Statements From the Tuidang Movement (March 2024)

Master Li Hongzhi: How Humankind Came to Be

China’s Overseas Product Dumping: Addressing Unfair Competition Caused by Systemic Differences

Faced with unfair competition brought about by systemic differences, countries around the world are right to impose tariffs and other barriers on Communist China

Latest

- Blinken in China: Calls for ‘Level Playing Field,’ Seeks Resolution Over Trade Disputes

- Moroccan Charm: A Timeless Journey Through Marrakech’s Ancient Medinas

- Taiwan to Remove Statues of Nationalist Chinese Leader Chiang Kai-shek

- Albany Gives NYC’s Top Cops $12k in Additional Pension Benefits as Adams Pushes Recruitment

- Innovation, Sustainability Shine at Natural Products Expo in California

FEATURED

US Considering Sanctions on Chinese Banks for Russia Dealings, But No Concrete Plans Yet

-

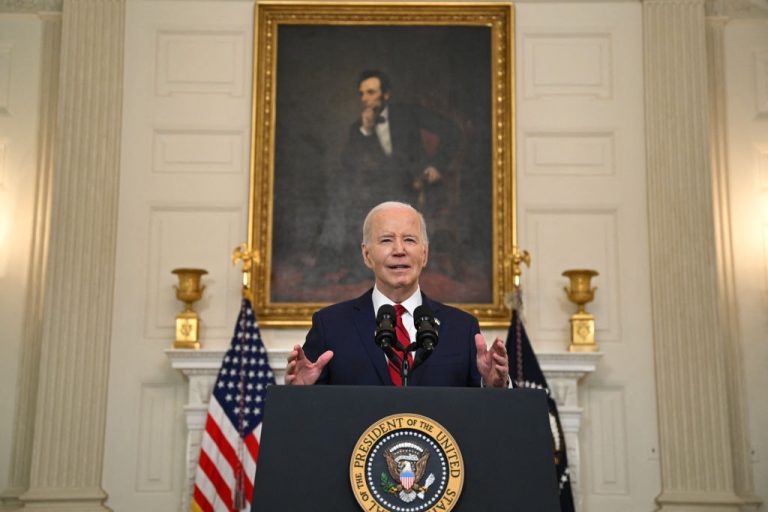

Biden Proposes Tripling Tariffs on Chinese Steel, Aluminum to Counter Beijing’s Product Dumping

US Puts Pressure on China as Ukraine War Escalates

Communist China Heads Down a Road of Isolation and Impoverishment

Chinese-American Artists Targeted in Planned NYT Piece That Would Misrepresent Falun Gong, Shen Yun Performing Arts

Shen Yun Artists Face Discrimination from Pro-CCP Official at US Customs Upon Return From European Tour

‘The CCP Does Not Represent China’: Falun Gong Practitioners Commemorate 25th Anniversary of Appeal in

On April 16, Sam Salehpour, a Boeing engineer turned whistleblower, told NBC News’ Tom Costello that all of Boeing’s 787 jets need to be grounded due to “fatal flaws” and that the entire fleet “needs attention.” His comments came one day ahead of his testimony before Congress where he told

Hundreds of Migrants Descend on New York City Hall Pleading for Work Permits, Green Cards

On April 16, hundreds of migrants — many believed to be illegal

US to Continue Sanctioning Iran to Disrupt its ‘Malign and Destabilizing Activity,’ Yellen Says

On April 16, U.S. Treasury Secretary Janet Yellen said Iran's attack on

‘Extraordinary artistry’: Shen Yun Delights Theatergoers in Sold-Out Lincoln Center Shows

NEW YORK, New York — After kicking off a highly anticipated 14-show

- ‘Very Positive Trends’: Number of Homicides Drops Significantly in the Big Apple

- US and Japan Forge Deeper Alliance to Counter China, Strengthen Economic Ties

- Controversial Geoengineering Experiment Launched Quietly in San Francisco to Avoid Public Backlash

- NY: Long Island Sen. Mario Mattera Tables Bill Addressing Squatting ‘Epidemic’

- Culinary Brilliance on Display at 87 Sussex: Jersey City’s New Dining Gem

- ‘An extension of their souls’: Shen Yun Continues to Wow at Lincoln Center

- ‘Beautiful!’: Shen Yun Wows Audiences in Multiple Sold-Out Lincoln Center Performances

- NY: MTA Demands NYC Marathon Pay $750K Congestion Pricing Toll for Using Verrazzano Bridge

- Production Delays, Disarray in Boeing Factory Prior to Door Plug Near-Tragedy, WSJ Reports

Recent research suggests a major change in the themes of Mandarin song lyrics, particularly those performed by and aimed at Chinese women. Where love and romance were once common, the lyrics now seem to call for freedom. According to a team led by Wenbo Wang, business

TikTok Surges in Vietnam Despite Increased Regulatory Pressures

TikTok, the global social media behemoth under Chinese company ByteDance, has seen a sizable spike in its market

Honoring Tradition: How Hong Kong’s ‘Noonday Gun’ Echoes Through History

Published with permission from LuxuryWeb Magazine

Every day at noon in Hong Kong, a distinct ceremony unfolds:

Published with permission from LuxuryWeb Magazine

Originating from the Caribbean island of Hispaniola, once a stronghold of some of the most infamous pirates (Henry Morgan, Blackbeard, Anne Bonny, Calico Jack, and Bartholomew Roberts), Ron Barceló Imperial Rum embodies a treasure that any 16th- or 17th-century privateer would have

Charges Laid One Year After Historic Canadian Gold Heist

On April 17, at a news conference, Canada’s Peel Regional Police said

A Journey Through Morocco: How to Master the Region’s Delicious Culinary Traditions

Published with permission from LuxuryWeb Magazine

Moroccan culinary arts are steeped in

Civil War in Sudan Enters 2nd Year: 15,000 Dead, 8 Million Displaced

The civil war in Sudan has been raging for over a year

- Peter Pellegrini Wins Slovak Presidential Election, Beating Pro-Western Opposition

- White House Directs NASA to Create Time Standard for the Moon

- Chinese Students Recount Horrifying Experience During Concert Hall Shooting in Moscow

- Japan Reverses Course, Increases Interest Rates for First Time in 17 Years

- Davide Scabin Rumored to Bring Culinary Genius Back to Turin, Italy

- Hong Kong Passes Infamous ‘Article 23’ in Show of Growing Communist Hold

- A Global Guide to Sweet Wines: From Europe to North America

- Trafficked Cambodian Teen Saved Following Facebook Plea

- Argentinian Austerity Measures Show Mixed Results as Inflation Remains High

Can cliffs lay eggs? That is what the cliff at the base of Mount Gandang in China's Guizhou province seems to have done for centuries. This egg-laying cliff produces smooth orbs that resemble bird eggs, but because they are composed of metamorphic rock, they are a thousand times heavier. At

East Coast Earthquakes – What to Know

In the wake of a rare magnitude 4.8 earthquake and multiple smaller aftershocks, many East Coast residents are grappling with earthquake awareness for the first time. The quake's epicenter was near Gladstone, New Jersey, and slight tremors were felt as far south as northern Virginia and as far north as the New Hampshire border. No

‘A new breath of life’: Shen Yun’s Opening Night at Lincoln Center Met With Resounding Acclaim

NEW YORK, New York — On April 3, Shen Yun kicked off its highly anticipated 14-show run at

A Holistic Approach to Histamine Intolerance

Do you suffer from chronic symptoms that can’t be pinned down to any specific cause? Gastrointestinal disorders, headaches, hives,

Sprightly and tenacious, feverfew is a member of the world’s largest and most diverse plant family — Asteraceae. Native to Asia and Europe, feverfew was first introduced to the United States in the 19th century. It is now commonly grown as a perennial in hardiness zones 5-10. Its small, prolific

The Power of Optimism – The Key to Longevity and Health

Life is hard, and staying positive is even harder. There are those who, despite sporting the scars of

Food Allergies – Why We Get Them, and How to Alleviate Them Naturally

Food allergies are an increasingly common dietary concern that can make dining out a risky affair. They occur

In ancient China, women were educated and raised to uphold a range of traditional values, which could be summed up as virtue (德), righteousness (義), propriety (禮), benevolence (仁), and trustworthiness (信). From noblewomen to commoners, many women of ancient China left their names in history for embodying these timeless

‘Absolutely brilliant’: Theatergoers Marvel at Shen Yun Performance in New Jersey

NEW BRUNSWICK, New Jersey — On March 31, Shen Yun concluded a five-show run at the prestigious State

US Officials Celebrate Shen Yun’s Return to Lincoln Center With Letters of Proclamation

As Shen Yun Performing Arts gears up for its highly anticipated return to New York's prestigious Lincoln Center