EDITOR'S PICK

-

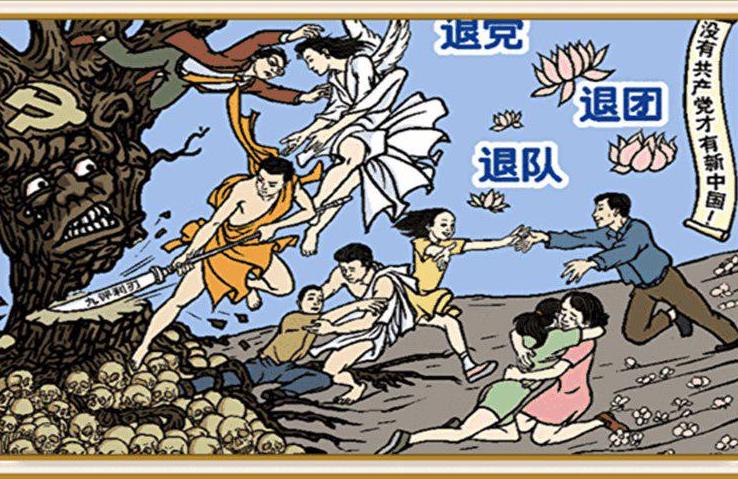

‘The CCP is the greatest enemy of the Chinese nation’: Statements From the Tuidang Movement (March 2024)

- Master Li Hongzhi: How Humankind Came to Be

‘China has been poisoned for over a century’: Statements From the Tuidang Movement (April 2024)

Latest

- ‘Undermines our integrity’: Kenneth Chiu Addresses Voter Fraud in NYS Assembly Race

- Spain Ends Traditional Bullfighting Award Amid Cultural Shift, Animal Advocacy

- Jose Perez: A Culinary Visionary Transforming American Dining

- TikTok Sues to Overturn US Law Forcing Divestment From ByteDance

- NY: Dozens of Jewish Sites Receive Disturbing Bomb Threat Text

FEATURED

- EU Leaders Meet Xi Jinping in Paris, Express Concern Over Beijing’s Trade Practices

- How the CCP Inflates China’s GDP

- Pro-CCP Sogavare Steps Down as Leader of the Solomons, Dealing Major Blow to Beijing

- ‘The CCP Does Not Represent China’: Falun Gong Practitioners Commemorate 25th Anniversary of Appeal in Beijing

- US Considering Sanctions on Chinese Banks for Russia Dealings, But No Concrete Plans Yet

- Biden Proposes Tripling Tariffs on Chinese Steel, Aluminum to Counter Beijing’s Product Dumping

Destruction of Ancestral Temple in Southern China Brings 4,000 People Out in Protest

Lifestyle

- Mind Over Matter: Train Your Brain and Transform Your Life

- New York’s Hidden Treasure – Beautiful, Ethical and Economical Herkimer Diamonds

After Eight Mother’s Days Without Her, I Appreciate My Mom More Than Ever

Being a long-distance daughter has taught me valuable lessons about the essence of motherhood.

POLICY & POLITICS

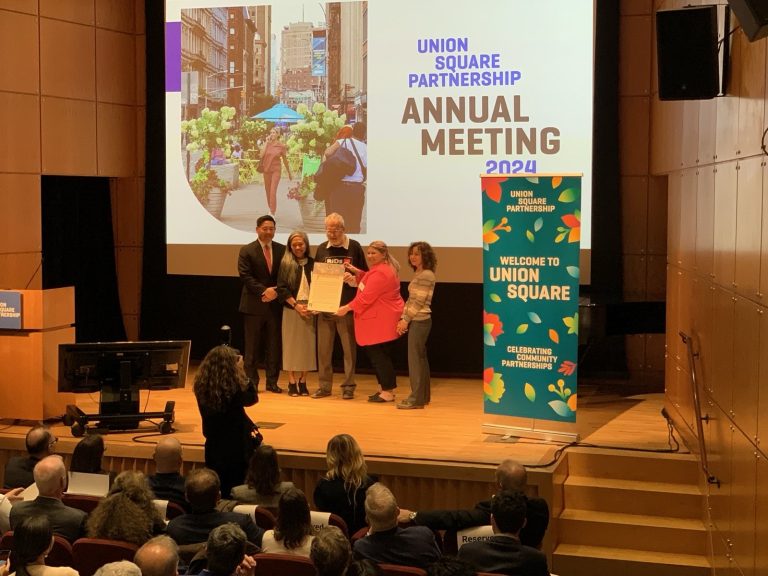

NEW YORK, New York — In a major boost to local economies, Small Business Services Commissioner Kevin D. Kim sat down with Vision Times on May 6 to talk about the exciting new initiatives that will bolster the city’s Business Improvement Districts (BIDs) and community-based organizations across the region. With

- NY: This Broadway Hotel Has Been Quietly Operating as a Migrant Shelter for Over a Year

A hotel in the heart of Broadway has been quietly operating as

- Thousands Arrested as Student Protests Spread, Grow Violent

Student protests in support of Palestine that erupted in April on college

- Meet Kenneth Paek: Veteran, Policeman, and Advocate Vying for NY’s 25th Assembly

OAKLAND GARDENS, New York — As residents in New York gear up

- Oil Prices Set for Steepest Weekly Drop in Three Months

- In China, Blinken Lays Out America’s Red Lines on Russia, Other Tensions

- Arizona Rancher George Alan Kelly Will Not Be Retried for Death of Mexican Migrant

- American Taxpayers Paying $451 Billion Annually to Address Southern Border Crisis

- ‘It moved my spirit’: See Why Theatergoers Are Enchanted With Shen Yun

- Blinken in China: Calls for ‘Level Playing Field,’ Resolution Over Trade Disputes

- Biden Signs Law Granting Massive $95 Billion Foreign Aid Package, Passes TikTok Bill

- Albany Gives NYC’s Top Cops $12k in Additional Pension Benefits as Adams Pushes Recruitment

- Innovation, Sustainability Shine at Natural Products Expo in California

On Friday, May 3, a new incursion of Communist Chinese military aircraft was detected by the Taiwan Defense Ministry in the Taiwan Strait area, with airplanes crossing the median line between the island and continental Asia. Beijing reported that its People’s Liberation Army (PLA) Navy carried out combat exercises with

- Highway Collapse in Southern China Kills 48 as Poor Weather Hampers Rescue Efforts

In the early hours of May 1, disaster struck southeastern China when a section of a four-lane highway

- Tibetans Who Sold Their Homes to Chinese Buyers Say Compensation Was Too Low

After selling their land to Chinese businessmen, Tibetan families are now reporting that they are not being compensated

On Monday, May 7, the Russian military announced the deployment of tactical nuclear weapons as part of a drill that Moscow says is a response to threats from France, Britain, and the United States. Russia has repeatedly warned of rising nuclear risks since it invaded Ukraine in 2022. Washington

- Australia’s Defense Minister Denounces China Over Aerial Confrontation

Australia’s defense minister Richard Marles said in a statement on May 6

- Flooding in Southern Brazil Claims at Least 39 Lives After Torrential Rains

Authorities in Brazil's southernmost state, Rio Grande do Sul, said

- Pandeli Locandasi: A Culinary Beacon Above Istanbul’s Vibrant Spice Bazaar

Published with permission from LuxuryWeb Magazine. Nestled above the main entrance of

- Moroccan Charm: A Timeless Journey Through Marrakech’s Ancient Medinas

- Taiwan to Remove Statues of Nationalist Chinese Leader Chiang Kai-shek

- Caribbean Treasure: Discovering the Rich Heritage of Ron Barceló Imperial Rum

- China’s Overseas Product Dumping: Addressing Unfair Competition Caused by Systemic Differences

- Charges Laid One Year After Historic Canadian Gold Heist

- A Journey Through Morocco: How to Master the Region’s Delicious Culinary Traditions

- South Korea’s President Yoon and Ruling Party Suffer Legislative Election Loss in Setback for Anti-Communist Politics

- US to Continue Sanctioning Iran to Disrupt its ‘Malign and Destabilizing Activity,’ Yellen Says

- TikTok Surges in Vietnam Despite Increased Regulatory Pressures

We have all experienced so-called "gut feelings" – when we suddenly feel uneasy about something that rationally seems fine or, conversely, have firm confidence in something that has a high risk of failure. These puzzling flashes of insight are also referred to as intuition, hunches or sixth sense. They

- Baoding Balls – They Do Much Better Than Bounce

Baoding balls may have been invented in China’s city of the same name, located in Hebei Province, but the Chinese characters reveal another layer of meaning. Bao (保) carries the meaning “to protect, defend, or ensure,” while ding (定) usually means “to fix, or make definite.” In China, these specialized

- For Powerful Traditional Remedies, Know and Grow Medicinal Herbs (G) Geranium

Geraniums are among the long list of baffling botanical nomenclature. While the beautiful showy flowers that are purchased

- The Sweet Satisfaction of Making Peace With Dessert

While people have been enjoying sweets for thousands of years, the concept of dessert has gradually evolved from

Do you suffer chronic symptoms that can’t be pinned down to any specific cause? Gastrointestinal disorders, headaches, hives, congestion, and itchy eyes can all be caused by histamine intolerance. Like gluten intolerance a couple decades ago, this condition is just gaining recognition and becoming a topic of study. Because it

- For Powerful Traditional Remedies, Know and Grow Medicinal Herbs (F) Feverfew

Sprightly and tenacious, feverfew is a member of the world’s largest and most diverse plant family — Asteraceae.

- The Power of Optimism – The Key to Longevity and Health

Life is hard, and staying positive is even harder. There are those who, despite sporting the scars of

Recent research has surfaced suggesting a major change in the themes of Mandarin song lyrics — particularly those performed by and aimed at Chinese women. Where love and romance were once common, now there seems to be a call for freedom. According to a team led by Wenbo Wang, business

- ‘Extraordinary artistry’: Shen Yun Delights Theatergoers in Sold-Out Lincoln Center Shows

NEW YORK, New York — After kicking off a highly anticipated 14-show run at the prestigious David H.

- The Fascinating Process Behind the Beauty of Natural Fibers (Part VIII): Pineapple

In our exploration of natural fibers we again return to South America to visit another dual-purpose crop. Believe