A form of artificial intelligence that clones voices was used to trick a bank manager into transferring US$35 million belonging to a company in the United Arab Emirates, according to court documents.

On Oct. 13, the U.S. Department of Justice filed for an ex parte order in the United States District Court for the District of Columbia involving a criminal money laundering case in the UAE that occurred in January of 2020.

The filing, which is sparse in details, states that a branch manager for a bank in Dubai received a call that appeared to be from a client, the Director of an unnamed company, at the same time as several emails that appeared to originate from the same executive and his company’s attorney.

The manager was told by the voice, which they recognized as that of the Director, that the company was about to make a business acquisition and needed to move the $35 million to multiple accounts in coordination with their lawyer, U.S.-based Martin Zelner.

It turns out the voice on the other end of the line, according to the DOJ, was a “‘deep voice’ technology used to simulate the voice of the Director.”

The money was transferred to bank accounts in multiple countries “in a complex scheme involving at least 17 known and unknown defendants.” UAE investigators found that two transactions, totalling slightly more than US$415,000, travelled through two accounts at U.S.-based Centennial Bank.

The application seeks to compel Centennial to provide “bank records and information” pertaining to the transactions.

An Oct. 14 article by Forbes said neither the Dubai Public Prosecution Office nor Martin Zelner had responded to press inquiries in the case.

In 2019, The Wall Street Journal reported on a similar case in which deep voice AI was used to defraud a UK energy company of 220,000 pounds. The technique convinced the CEO of the UK subsidiary that the German CEO of his firm’s parent company was on the line, adamant that the transfer be processed within the hour.

The Journal says the fooled executive “recognized his boss’ slight German accent and the melody of his voice on the phone.”

The article says the fraudsters got greedy as they went in for the kill: “The attackers responsible for defrauding the British energy company called three times…After the transfer of the $243,000 went through, the hackers called to say the parent company had transferred money to reimburse the U.K. firm.”

“They then made a third call later that day, again impersonating the CEO, and asked for a second payment. Because the transfer reimbursing the funds hadn’t yet arrived and the third call was from an Austrian phone number, the executive became suspicious. He didn’t make the second payment.”

A distorting trend

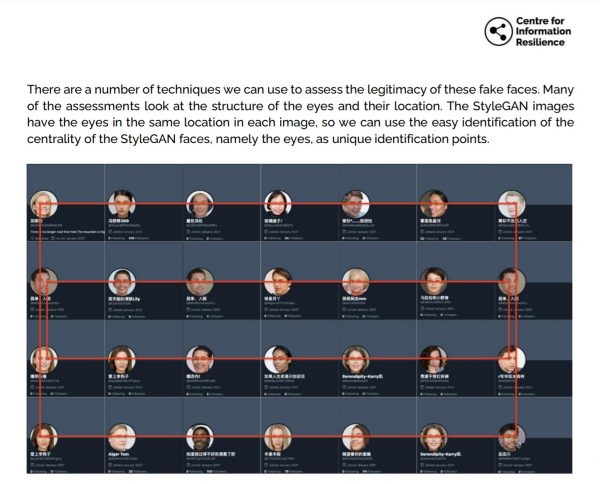

Deep fake AI cognitive warfare and attacks are becoming ever more frequent. In August, the Centre for Information Resilience published a report showing how the Chinese Communist Party had used a botnet of fake Twitter accounts to spread influence in both Chinese and English on the topics of the persecution of Uyghur Muslims, the Coronavirus Disease 2019 pandemic, and gun control and racial justice issues in the United States.

Researchers found that one of the most prevalent tells was the use of profile photos created with StyleGAN, a generative adversarial network (GAN). One of the strongest signs that the photos were GAN-generated and not of real humans was that the eyes in every picture sat on the same horizontal axis, a pattern the report made painfully clear in supporting its thesis.
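The eye-alignment tell lends itself to a simple check. The following is a minimal sketch, not the method used in the report: it assumes that eye heights have already been extracted from each profile photo by some face-landmark tool, and the function name `eyes_share_axis`, the `tolerance` value, and the sample numbers are all hypothetical.

```python
from statistics import pstdev


def eyes_share_axis(eye_rows, tolerance=0.01):
    """Return True when eye heights barely vary across a batch of photos.

    eye_rows: list of (left_eye_y, right_eye_y) pairs, one per photo,
    each given as a fraction of image height (0.0 = top, 1.0 = bottom).
    tolerance: hypothetical cut-off for the allowed standard deviation.
    """
    if len(eye_rows) < 2:
        return False  # a single photo cannot reveal a shared pattern
    left = [left_y for left_y, _ in eye_rows]
    right = [right_y for _, right_y in eye_rows]
    # Real photos vary in framing, so eye heights should spread out;
    # near-zero spread across many accounts is the suspicious signal.
    return pstdev(left) <= tolerance and pstdev(right) <= tolerance


# Made-up sample values, for illustration only.
suspect_batch = [(0.380, 0.381), (0.379, 0.380), (0.381, 0.380)]
ordinary_batch = [(0.31, 0.33), (0.45, 0.44), (0.38, 0.41)]
```

With these sample values, `eyes_share_axis(suspect_batch)` returns `True` and `eyes_share_axis(ordinary_batch)` returns `False`. A match on this heuristic alone would be a reason for closer inspection, not proof that a photo is synthetic.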

In March, the TikTok account DeepTomCruise published a trio of videos using AI-generated deep fake technology appearing to show the Hollywood star swinging a golf club, doing a magic trick, and talking about meeting Mikhail Gorbachev.

To the naked eye, the clips were very convincing. The only tells of inauthenticity emerged under careful analysis of slowed-down footage, which showed that the fake’s sunglasses disappeared for a few frames after being moved in front of the head.

Cognitive Warfare

A November 2020 report by Information Hub titled Cognitive Warfare, which describes itself as a study sponsored by NATO’s Allied Command Transformation, describes how the technique aims to erode the public’s trust: “Social engineering always starts with a deep dive into the human environment of the target. The goal is to understand the psychology of the targeted people.”

The report continues, “This phase is more important than any other as it allows not only the precise targeting of the right people but also to anticipate reactions, and to develop empathy. Understanding the human environment is the key to building the trust that will ultimately lead to the desired results.”

“Cognitive warfare pursues the objective of undermining trust (public trust in electoral processes, trust in institutions, allies, politicians…) therefore the individual becomes the weapon, while the goal is not to attack what individuals think but rather the way they think.”

“It has the potential to unravel the entire social contract that underpins societies,” states the author.